The MultiSpin.AI project recently took part in a BIU seminar held on December 11, 2025, featuring an in-depth talk by Konstantin Zvezdin (SpinEdge). The presentation, entitled “Spintronics Beyond MRAM: From Data Storage to Computing”, offered a comprehensive perspective on how spintronic technologies are evolving from established memory solutions toward a new class of energy‑efficient computing paradigms.

From Memory Elements to Computational Primitives

Spintronics has long been associated with magnetic data storage and the commercial success of MRAM. However, recent advances in device physics are reshaping this view. The seminar reframed spintronic devices not as passive memory components, but as active computational primitives capable of supporting analog computation, probabilistic processing, and physics‑driven AI acceleration.

At the device level, the talk introduced the key physical mechanisms underpinning this transition: spin‑orbit torque (SOT), interfacial anisotropy engineering, and thermal stochasticity. SOT and interfacial anisotropy are already exploited in MRAM, where thermal fluctuations are usually treated as a reliability concern to be suppressed; together, these effects also form the foundation for the next generation of spin‑based computing devices.

Analog In‑Memory Computing with Spintronic Synapses

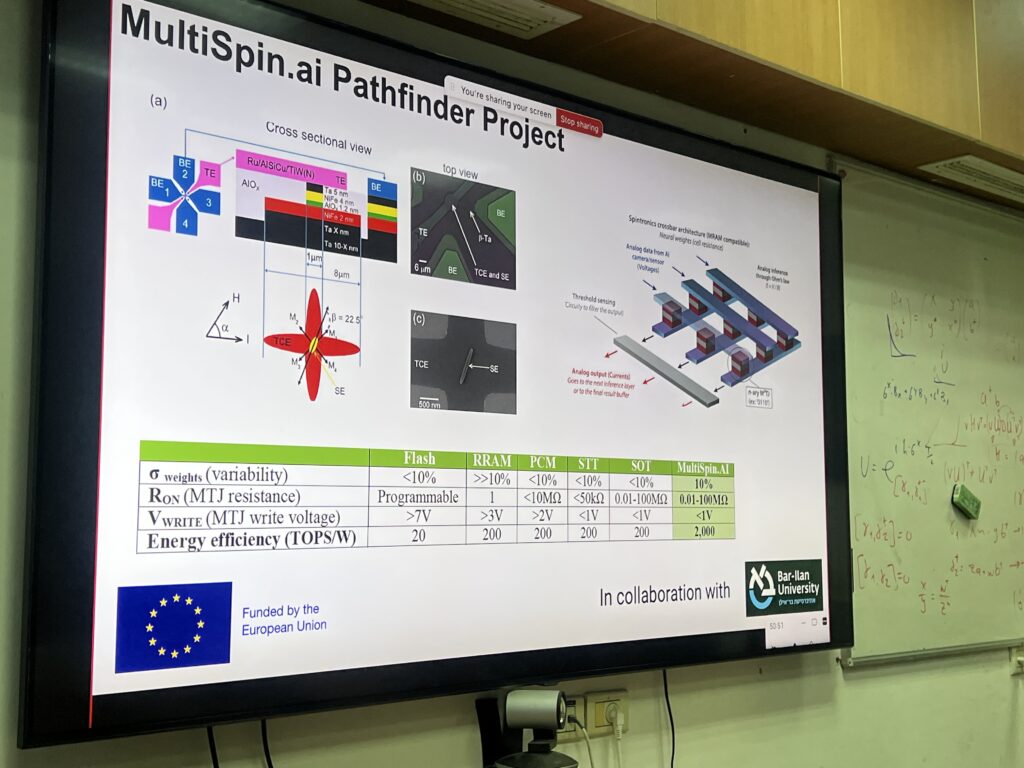

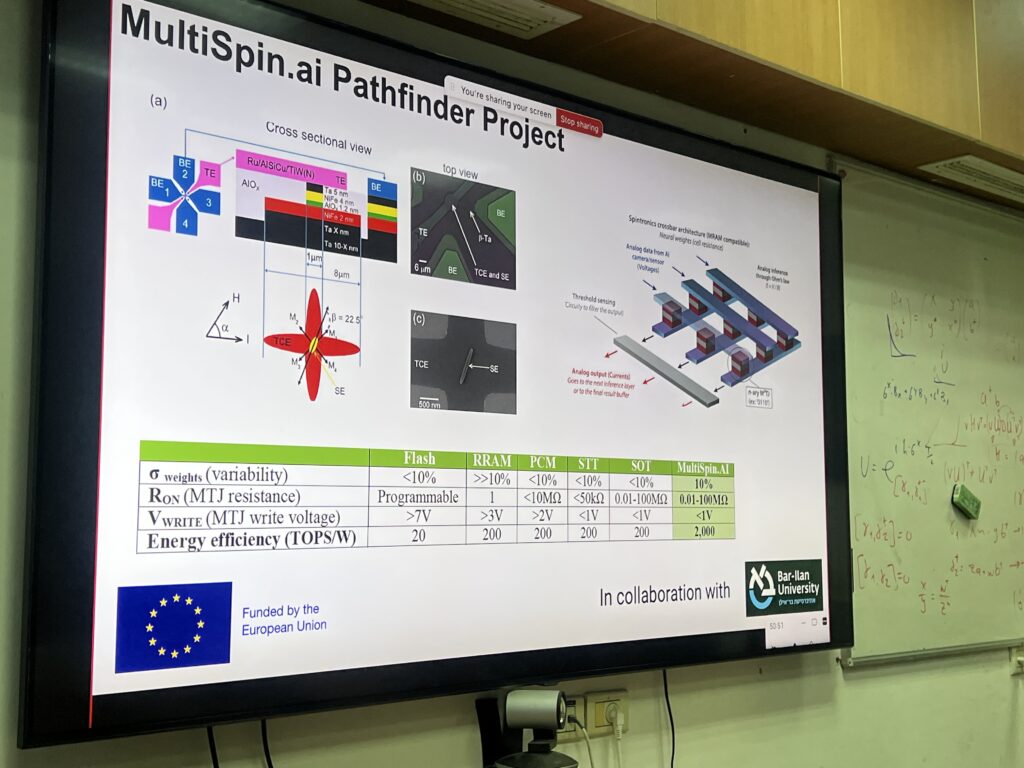

A central theme of the presentation was the development of multilevel SOT‑MRAM synapses. These devices provide stable, tunable conductance states while maintaining high on‑chip density and ultra‑low leakage. These properties make them strong candidates as building blocks for analog in‑memory computing, where computation is performed directly within the memory array.

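In a spintronic crossbar, input values are applied as row voltages and weights are stored as device conductances. By Ohm’s law and Kirchhoff’s current law, the current collected on each column is the weighted sum of the inputs, so vector–matrix multiplication, a core operation in AI workloads, is executed directly in hardware in a single analog step. Because the weights stay inside the array instead of being shuttled between memory and processor, this approach promises orders‑of‑magnitude reductions in energy consumption compared to conventional digital computing, addressing one of the key bottlenecks in modern AI systems.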
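The crossbar principle can be illustrated with a minimal sketch of an idealized array. It assumes perfect devices, no wire resistance, no sneak paths and no read noise, none of which hold in real hardware; the function name `crossbar_vmm` and the example values are hypothetical and not taken from the talk.

```python
from typing import List


def crossbar_vmm(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Column currents of an idealized crossbar (hypothetical helper).

    voltages[i]        : read voltage on row i, in volts
    conductances[i][j] : conductance of the device at row i, column j, in siemens
    Assumes ideal devices: no wire resistance, no sneak paths, no noise.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("exactly one input voltage per row is required")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law: the device at (i, j) injects I = V_i * G_ij into column j.
            # Kirchhoff's current law: all injections on a column wire add up.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


if __name__ == "__main__":
    V = [0.1, 0.2]               # inputs encoded as row voltages (V)
    G = [[1e-6, 2e-6, 3e-6],     # weights encoded as conductances (S)
         [4e-6, 5e-6, 6e-6]]
    print(crossbar_vmm(V, G))    # one output current per column (A)
```

In hardware, the double loop disappears: all multiply‑accumulate operations happen concurrently as currents sum on the column wires, and only the column currents need to be digitized.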

Scalable Architectures for AI Workloads

Moving beyond individual devices, the seminar presented architectural strategies for scaling analog multiply‑accumulate (MAC) arrays—from 16×16 tiles up to arrays approaching 2k×2k. Topics such as adaptive quantization, device variability compensation, and binary‑tree routing were discussed as essential enablers of large‑scale deployment.

Importantly, the proposed methodology allows pre‑trained AI models to be mapped onto analog spintronic arrays without retraining, while still maintaining accuracy within a few percent of digital baselines. This capability significantly lowers the barrier for adopting analog hardware accelerators in real‑world AI applications.
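To make the idea of mapping a pre‑trained model onto multilevel devices more concrete, the sketch below shows one generic post‑training approach: each signed weight is represented by a differential pair of devices (G⁺, G⁻), each device is restricted to a fixed number of evenly spaced conductance levels, and a per‑array scale is derived from the largest weight magnitude. This is a simple stand‑in chosen for illustration, not the adaptive quantization or variability compensation scheme presented at the seminar; the function name, the level count and the conductance range are all hypothetical.

```python
from typing import List, Tuple


def map_weights_to_conductances(
    weights: List[List[float]],
    n_levels: int = 8,
    g_min: float = 1e-6,
    g_max: float = 8e-6,
) -> Tuple[List[List[float]], List[List[float]], float]:
    """Hypothetical post-training mapping of signed weights onto device pairs.

    Returns (g_plus, g_minus, scale) such that
    weight ~= (g_plus - g_minus) / scale, with every conductance restricted
    to n_levels evenly spaced states between g_min and g_max.
    """
    if n_levels < 2:
        raise ValueError("at least two conductance levels are required")
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    step = (g_max - g_min) / (n_levels - 1)   # spacing between device states
    scale = (g_max - g_min) / w_max           # per-array scale from the weight range
    g_plus: List[List[float]] = []
    g_minus: List[List[float]] = []
    for row in weights:
        row_p, row_m = [], []
        for w in row:
            # Nearest number of level steps above g_min that represents |w|.
            k = round(abs(w) * scale / step)
            g_active = g_min + k * step
            # The sign decides which device of the pair carries the weight;
            # the other device sits at g_min so the difference encodes |w|.
            if w >= 0:
                row_p.append(g_active)
                row_m.append(g_min)
            else:
                row_p.append(g_min)
                row_m.append(g_active)
        g_plus.append(row_p)
        g_minus.append(row_m)
    return g_plus, g_minus, scale
```

Since the mapping only rounds existing weights to the nearest available level, no retraining is involved; the accuracy cost depends on how well the chosen number of levels covers the weight distribution, which is where more elaborate adaptive schemes come in.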

Probabilistic Computing and Quantum‑Inspired Approaches

In addition to deterministic analog computation, the talk highlighted a complementary direction: probabilistic computing using stochastic magnetic tunnel junctions (MTJs) as probabilistic bits (p‑bits). Rather than suppressing thermal noise, these devices actively exploit it to implement Boltzmann samplers and Ising‑type inference engines directly in hardware.
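The behavior of a p‑bit network can be emulated in software with the widely used p‑bit update rule m_i = sgn(tanh(β·I_i) − r), where r is uniform in (−1, 1) and I_i = Σ_j J_ij·m_j + h_i is the input from the other p‑bits. Updating the p‑bits one at a time performs Gibbs sampling of the Ising Boltzmann distribution. In the sketch below, a pseudo‑random number stands in for the thermal noise that a stochastic MTJ would supply physically; the function name and parameters are hypothetical, and J is assumed symmetric with a zero diagonal.

```python
import math
import random
from typing import List


def pbit_gibbs_sample(J: List[List[float]], h: List[float], beta: float,
                      n_sweeps: int, seed: int = 0) -> List[List[int]]:
    """Emulate sequentially updated p-bits (hypothetical helper).

    Samples P(m) ~ exp(-beta * E(m)) with
    E(m) = -1/2 * sum_ij J_ij m_i m_j - sum_i h_i m_i,
    assuming J is symmetric with zeros on the diagonal.
    Returns the full state after each sweep.
    """
    rng = random.Random(seed)
    n = len(h)
    m = [rng.choice((-1, 1)) for _ in range(n)]   # random initial state
    samples: List[List[int]] = []
    for _ in range(n_sweeps):
        for i in range(n):
            # Input to p-bit i from its neighbors plus its bias.
            I_i = sum(J[i][j] * m[j] for j in range(n)) + h[i]
            # Software stand-in for the thermal noise of a stochastic MTJ.
            r = rng.uniform(-1.0, 1.0)
            # P(m_i = +1) = (1 + tanh(beta * I_i)) / 2, the Gibbs conditional.
            m[i] = 1 if math.tanh(beta * I_i) > r else -1
        samples.append(list(m))
    return samples


if __name__ == "__main__":
    # Two ferromagnetically coupled p-bits: aligned states should dominate.
    runs = pbit_gibbs_sample([[0.0, 1.0], [1.0, 0.0]], [0.0, 0.0], beta=2.0, n_sweeps=5000)
    print(sum(a == b for a, b in runs) / len(runs))
```

For this two‑p‑bit example the Boltzmann distribution assigns the aligned states a combined probability of 1/(1 + e⁻⁴) ≈ 0.98, so the printed fraction should land close to that value. Encoding an optimization problem in J and h, and lowering the temperature (raising β), turns the same sampler into an Ising‑type solver.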

Such probabilistic hardware can act as quantum‑inspired accelerators, offering efficient solutions for optimization and inference problems. When combined with analog crossbars, these systems enable hybrid deterministic–stochastic computation, opening new possibilities for AI and neuromorphic architectures.

Toward a Unified Spintronic Computing Stack

The seminar also touched on experimental and theoretical work on broadband spin‑torque rectification in MTJs, demonstrating GHz‑range, field‑free detection. These results are particularly relevant for sensory front‑ends in embedded and edge‑AI systems, where low power consumption and compact integration are critical.

Overall, the presentation outlined a vision of a unified spintronic computing stack, spanning device physics, circuit architectures, and AI model adaptation. In this context, spintronics moves decisively beyond non‑volatile memory and emerges as a key enabling technology for post‑CMOS, physics‑native computing, supporting energy‑efficient, off‑grid AI inference.

Relevance for MultiSpin.AI

The topics presented at the BIU seminar strongly align with the objectives of the MultiSpin.AI project, which aims to explore novel spintronic concepts for next‑generation AI hardware. Insights from SpinEdge’s work directly contribute to advancing scalable, low‑power, and intelligent computing solutions that bridge fundamental physics and real‑world AI applications.

MultiSpin.AI thanks Konstantin Zvezdin and SpinEdge for the insightful presentation and BIU for hosting the seminar, fostering valuable exchange between research, technology development, and system‑level innovation.